Critical Thinking & AI

OK, it’s no secret to anyone who knows me that I am pretty capable of managing technology to get the job done, and that I am, at the same time, an occasional wannabe Luddite. I simply find that pen and paper can be so much easier sometimes (says the woman tippy-tapping on a computer). Part of the challenge is that I enjoy being able to create and do things on my own, so I don’t naturally embrace technologies that take over for me.

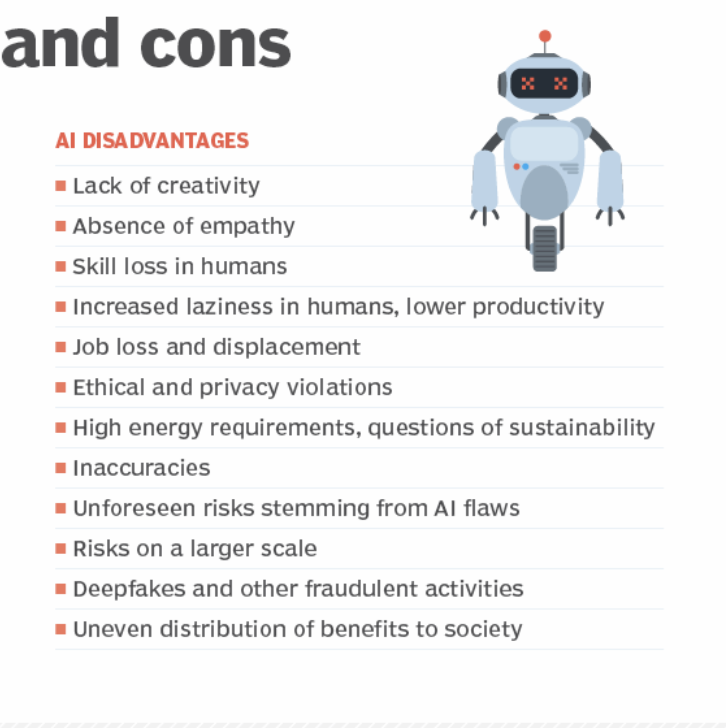

Enter Artificial Intelligence (AI). As an artist, creative writer, and photographer, I have a boatload of ethical issues with how AI sources its material. For the moment, however, I will put that topic aside. Instead, I would like to examine a few concerns about the use of AI in research and education. I will start with this article from TechTarget, which lists some pretty typical pros and cons of AI.

Pros & Cons of AI according to TechTarget

What is the Purpose of AI?

Responses like “AI is to benefit society” or “it is to enhance and improve various aspects of human life” offer an idealistic view of AI’s purpose. I love those goals and sincerely hope we get there, but I believe they’re currently naive. The idea that AI is here to improve the human condition is great marketing, but the reality is that AI was created by developers because they could, and it was funded by commercial interests because they wanted to make money. The utopian concept that AI exists for non-commercial scientific, social, or humanistic reasons (à la Star Trek) was not sufficiently considered in the creation of current AI systems and has yet to be coded. Some of my reservations about AI are reflected in a less talked-about perspective, though one that does appear in the Stanford Social Innovation Review article Generative AI Is All About the Money.

Dr. S. Craig Watkins, speaking on the Unlocking Us podcast, provides some great food for thought in his argument about what has been missing so far and is desperately needed: the contributions of social scientists, humanists, designers, ethicists, and subject matter experts before we implement AI systems that we do not yet fully comprehend.

Why We Still Need Critical Thinking When Using AI

Returning to the expected advantages of AI, TechTarget’s list includes improved accuracy, unbiased decision-making, democratization of knowledge, and expanded access to expertise. However, in her article The Blinking Cursor: Navigating Storytelling, Creativity, and Writing in the Advent of Generative AI, Natalie Anklesaria discusses why human critical thinking remains necessary when using AI. She raises several issues:

Hallucination

Both accurate and inaccurate information are likely available for data scraping, but AI could end up providing only the biased or incorrect information. Accurate information may be available on the web; however, “since generative AI algorithms produce output based on statistical patterns,” AI may bury the accurate information and regurgitate the higher volume of inaccurate information.

Built-In Bias

Many large language models (LLMs) draw their information from web scraping, and they “eventually format output based on statistical connections between words.” Anklesaria points out that if the scraped source material is biased, lacks diversity, or does not come from inclusive spaces, then the output will have “voices and perspectives missing, right from their inception.”

Coded Bias

Anklesaria quotes Meredith Broussard’s argument that “AI favours replicating and recycling, which could stagnate social process.” Further, Anklesaria discusses the multiple ways that AI could be encouraging writing that is “hegemonic” (voicing only the ruling or dominant group in a political or social context), or writing that uses perfect but bland grammar lacking individuality or personality.

The Black Box Problem

If we acknowledge that generative AI programs may provide inaccurate or biased output, then “learners need the ability to understand the topic itself” so that they have the skills to sift through that output. If they lack these skills, or assume the AI output is correct without thinking critically about it, they may end up relying on incorrect information.

My concern is that people expect AI to produce information that is more accurate and unbiased, that democratizes knowledge, and that expands access to expertise. However, as Anklesaria points out, it is quite possible that current AI output is not delivering these assumed advantages. There is a high risk that users are blindly trusting AI output without thinking critically about it, and that could have serious ramifications.

The So What?

Despite my reservations, I cannot deny the incredible potential behind AI. My concern is that AI is being embraced as an authority and heralded as more accurate and less biased than humans, especially as a research resource and a tool for analysis or decision-making. There is a perception that AI output is infallible, and that is simply not true. AI users still have a responsibility to question everything and exercise critical thinking. Additionally, to address what the AI community calls the “alignment problem,” we need to examine how AI systems are built, with additional experts at the table. These people are needed to help design the guardrails, policies, and ethical principles that are not yet in place. I get that the education world wants to be on the cutting edge of technological advances like AI. However, until these issues come to the forefront for discussion, they will not be resolved. Further, it is dangerous to implement AI systems before resolving these issues, yet AI systems appear to be pushed into use without anyone even acknowledging that the issues exist.

So, those are my thoughts on this much-discussed topic. Thanks for chatting, and I will post again soon!

~ Jennifer

References:

Anklesaria, N. (2024, October 16). The blinking cursor: Navigating storytelling, creativity, and writing in the advent of generative AI. Academica Forum. https://forum.academica.ca/forum/the-blinking-cursor

Fruchterman, J. (2024, January 25). Generative AI is all about the money. Stanford Social Innovation Review. https://doi.org/10.48558/wda8-7059

Oxford Languages Dictionary. (n.d.). Hegemonic. In Google. Retrieved October 26, 2024, from https://www.google.com/search?q=hegemonic

Pratt, M. K. (2024, September 19). 24 advantages and disadvantages of AI. TechTarget. https://www.techtarget.com/searchenterpriseai/tip/Top-advantages-and-disadvantages-of-AI

Watkins, S. C. (Guest). (2024, April 23). Why AI’s potential to combat or scale systemic injustice still comes down to humans. In Unlocking Us. https://brenebrown.com/podcast/why-ais-potential-to-combat-or-scale-systemic-injustice-still-comes-down-to-humans/